Although GPT-4o as a technology is a couple of years old, it attracted another round of attention in November, right after OpenAI, the developer of GPT-4o, announced a breakthrough with ChatGPT. If you missed the fuss for any reason, we’ll briefly describe what it is and why everyone is talking about it.

Yet our primary focus today will be on GPT-4o alternatives you can use for free. So, let’s start with the basics and then proceed to an overview of open-source analogues of this much-discussed technology.

What is GPT-4o?

GPT stands for Generative Pre-trained Transformer. The GPT-4o model is an artificial intelligence model that can produce a wide range of human-like text.

GPT-4o is OpenAI’s flagship multimodal model, introduced in May 2024. The “o” stands for “omni,” reflecting its ability to process and reason across text, images, and audio seamlessly, all within a single model architecture.

It represents a leap forward from GPT-4 in speed and responsiveness, particularly for real-time voice and vision tasks.

Unlike earlier models that handled each modality separately or in a pipeline, GPT-4o can interpret a diagram, listen to a question, and respond conversationally in a single, unified interaction.
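To make the "single, unified interaction" idea more concrete, here is a minimal sketch of how a text question and an image can travel together in one request message. The dictionary shape follows OpenAI's Chat Completions message format as we understand it; the helper name `build_multimodal_message` is our own, and no network call is made, so treat it as an illustration and check the current API reference before relying on it.

```python
import base64


def build_multimodal_message(question, image_bytes, mime="image/png"):
    """Bundle a text question and an image into one user message.

    Hypothetical helper: only the returned dictionary shape is meant to
    mirror the Chat Completions message format.
    """
    # Images can be passed inline as a base64-encoded data URL.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {
                "type": "image_url",
                "image_url": {"url": f"data:{mime};base64,{encoded}"},
            },
        ],
    }


# Placeholder bytes stand in for a real diagram file.
message = build_multimodal_message("What does this diagram show?", b"abc")
print(message["content"][0]["text"])
```

A message like this would be sent in the `messages` list of a single request, so the model sees the question and the image together rather than in separate pipeline steps.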

While the exact parameter count has not been disclosed, GPT-4o is considered a major architectural and efficiency upgrade over its predecessors.

What Is ChatGPT?

ChatGPT is OpenAI’s chatbot that answers questions in a conversational, dialogue-style format.

It’s designed to provide natural, interactive dialogue with users and can handle a wide range of tasks from writing and research to tutoring and coding assistance. ChatGPT’s latest versions integrate multimodal capabilities (through GPT-4o), allowing users to upload images, interact via voice, and receive responses that incorporate text, audio, or visual understanding.

It’s available through a web interface and mobile apps, with both free and subscription-based tiers.

Some say (including OpenAI CEO, Sam Altman) that it can even take down Google over time. For more context, check out the video.

Top 9 free GPT-4o alternative AI models

Now that you have an idea of what this technology is, let’s move on to the OpenAI GPT-4o competitors.

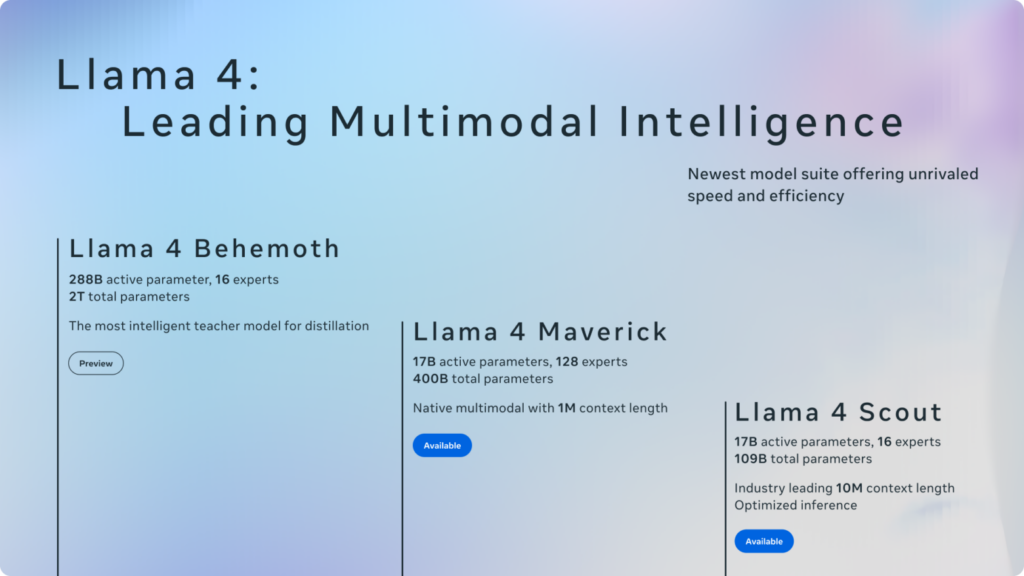

Llama 4 by Meta AI

Meta’s Llama 4 series represents the next evolution of open-weight language models, offering state-of-the-art performance across reasoning, code generation, and multilingual understanding.

The flagship variant, Llama 4 Maverick, delivers GPT-4-class capabilities using fewer active parameters, emphasising architectural efficiency and fine-tuned task alignment. With its broad availability and strong ecosystem support, Llama 4 is more than just a model—it’s an infrastructure for research, product development, and advanced AI deployment.

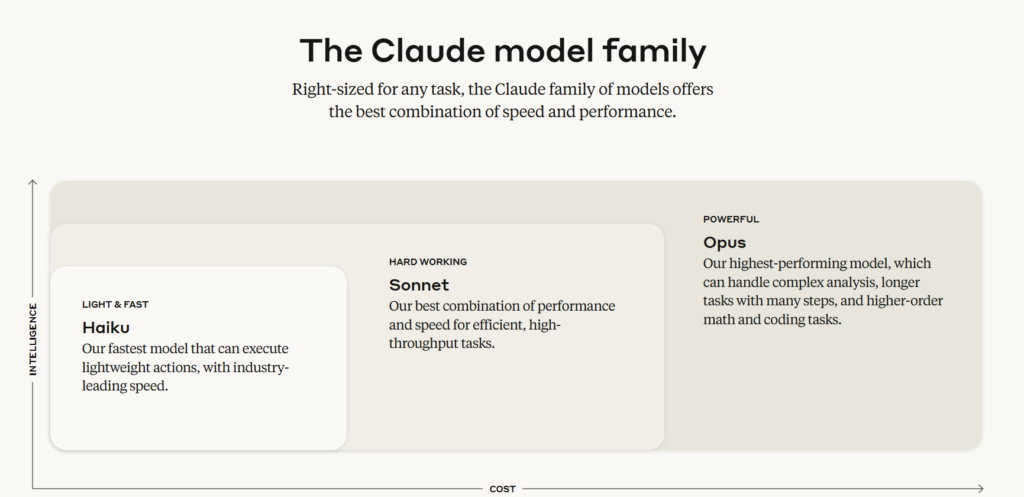

Claude by Anthropic

Claude stands out as a high-performing, human-centric language model engineered with safety, reliability, and conversation depth at its core.

Developed by Anthropic, it offers robust capabilities in coding, document analysis, and open-domain dialogue while prioritising ethical AI behaviour. Claude’s balanced tone, contextual memory, and polished user experience make it particularly suited for business, education, and decision-support scenarios. It’s less a chatbot, more a collaborative partner.

Gemini by Google

Gemini is Google’s answer to the modern AI stack—fusing deep language understanding with live web access, real-time citation, and native compatibility with tools like Docs, Sheets, and Search.

Building on the legacy of PaLM and LaMDA, Gemini excels at multi-step reasoning, fact-grounding, and multimodal input. Whether you’re using the Gemini app (formerly Bard) or deploying through Vertex AI, Gemini offers an extensible platform for reliable, contextual, and research-focused language tasks.

DeepSeek

DeepSeek is quickly carving a niche in the AI landscape with large language models that combine competitive performance with cost efficiency.

Offering models trained on a blend of code and natural language, DeepSeek is particularly strong in technical writing, program synthesis, and creative ideation. Its open availability and thoughtful training approach make it a favourite among developers and startups looking for serious capability without the scale of Big Tech budgets.

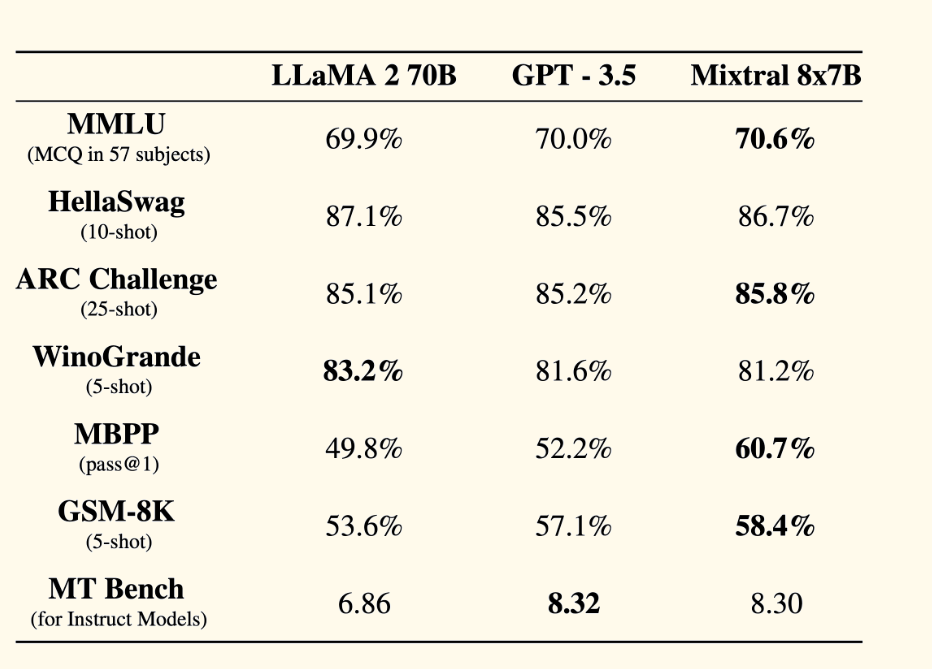

Mistral Mixtral 8x7B

Mixtral 8x7B is a mixture-of-experts model released by French startup Mistral AI. It activates only two of eight expert networks during inference, striking a strong balance between performance and computational efficiency.

Though not multimodal, Mixtral offers top-tier language capabilities in an open-weight format—comparable to GPT-3.5 and even approaching GPT-4 on some benchmarks.

It’s particularly suited for product teams who want transparency, self-hosting, and a fast, performant base model to fine-tune or adapt. Unlike GPT-4o, however, Mixtral lacks native vision or audio support.
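The "two of eight experts" routing described above can be sketched in a few lines. This is a toy illustration in plain Python, not Mistral's implementation: the function names, the tiny vector sizes, and the stand-in experts are all assumptions made for the example. The idea it shows is that a gate scores every expert, only the two best-scoring experts actually run, and their outputs are blended by the renormalised gate scores.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def top2_moe(x, gate_weights, experts):
    """Route one token vector through the 2 highest-scoring experts."""
    # Gate: one logit per expert (dot product of the token with a gate row).
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    # Keep the indices of the two largest logits.
    chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:2]
    # Renormalise the scores over the chosen experts only.
    weights = softmax([logits[i] for i in chosen])
    # Only the chosen experts are evaluated; the other six are skipped.
    out = [0.0] * len(x)
    for weight, index in zip(weights, chosen):
        expert_out = experts[index](x)
        out = [o + weight * y for o, y in zip(out, expert_out)]
    return out, chosen


# Eight stand-in "experts": expert i simply scales the input by i + 1.
experts = [lambda v, s=i + 1: [s * vi for vi in v] for i in range(8)]
gate = [[float(i), 0.0] for i in range(8)]
output, used = top2_moe([1.0, 0.0], gate, experts)
print(used)
```

Because only two experts run per token, the compute per token is far lower than the total parameter count would suggest, which is the efficiency trade-off the section refers to.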

xAI’s Grok

Grok is a family of large language models developed by xAI, integrated directly into the X (formerly Twitter) platform. Positioned as a GPT-4 alternative, Grok is designed to provide real-time reasoning over social media data, with access to live trending topics and contextual content.

Grok-1.5, its latest version, shows competitive performance on benchmarks like MMLU and HumanEval, though its multimodal capabilities are still limited. Its standout feature is real-time integration with the X platform.

Command R+ by Cohere

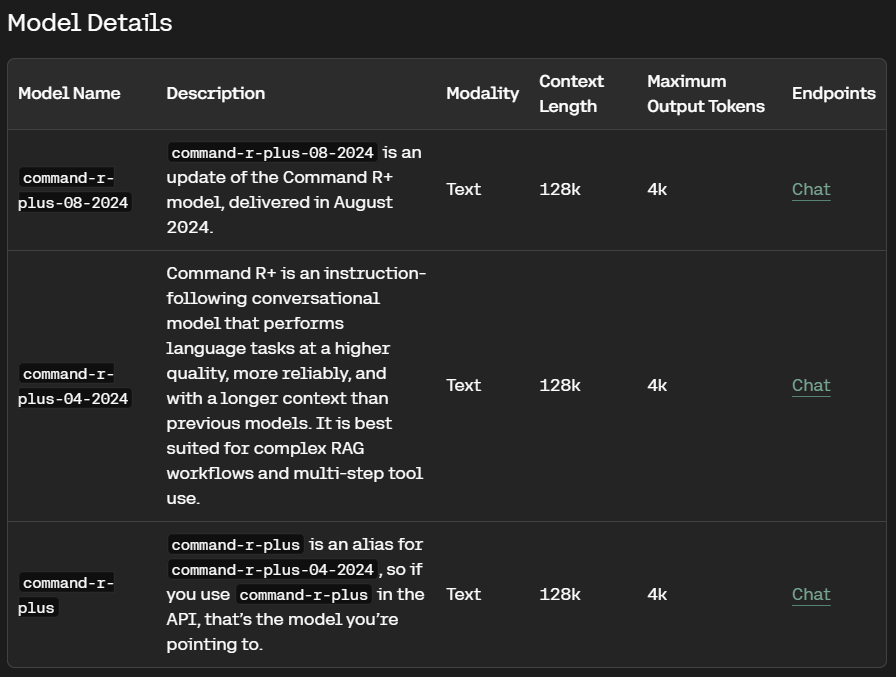

Cohere’s Command R+ is a state-of-the-art open-weight model optimised for retrieval-augmented generation (RAG).

Unlike GPT-4o, it’s not multimodal and does not handle vision or audio tasks. However, its performance in document understanding, summarisation, and structured reasoning makes it a strong choice for enterprise and knowledge-heavy applications.

Released in 2024, Command R+ is one of the few open models optimised explicitly for RAG scenarios, where external documents are injected into the model’s context to improve factual accuracy and recall.
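As a rough illustration of what "injecting external documents into the model’s context" means, the sketch below ranks a few documents against a question and pastes the best matches into the prompt. Everything here is an assumption for the example: the word-overlap scoring is a stand-in for a real retriever (typically embedding search), and `build_rag_prompt` is a hypothetical helper, not part of Cohere's API.

```python
def score(query, doc):
    """Toy relevance score: number of lowercase words shared with the query."""
    return len(set(query.lower().split()) & set(doc.lower().split()))


def build_rag_prompt(query, documents, k=2):
    """Pick the k most relevant documents and place them in the prompt."""
    ranked = sorted(documents, key=lambda d: score(query, d), reverse=True)
    sources = "\n".join(
        f"[{i + 1}] {doc}" for i, doc in enumerate(ranked[:k])
    )
    # The model is asked to answer from the supplied sources only.
    return (
        "Answer using only the sources below.\n\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {query}"
    )


docs = [
    "Invoices are due within 30 days.",
    "The office is closed on Fridays.",
    "Late invoices incur a 2% fee.",
]
print(build_rag_prompt("When are invoices due?", docs))
```

The resulting string is what gets sent to the language model, so its answer can be grounded in (and cite) the numbered sources rather than relying on what it memorised during training.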

Yi 34B by 01.AI

Yi 34B is a powerful open-weight language model released by 01.AI, a Chinese AI startup led by Kai-Fu Lee.

It outperforms many larger models on key language benchmarks and is particularly strong in multilingual tasks, including Chinese and English. Yi-34B is not multimodal, but it supports high-performance instruction following and reasoning.

Its architecture makes it suitable for fine-tuning or embedding into downstream applications, especially for users looking for a strong open model that can be hosted or modified without licensing restrictions.

Mamba (State Space Models)

Mamba introduces a new state space model (SSM) architecture designed as an alternative to transformers. Unlike attention-based LLMs such as GPT-4o, whose compute cost grows quickly with context length, Mamba processes sequences through a fixed-size recurrent state, offering significant gains in memory and compute use for long-context, sequence-based tasks.

While still early in adoption and not as performant in general-purpose reasoning, Mamba represents a radical architectural innovation with strong potential in high-throughput or low-latency environments. It’s not multimodal yet, but it serves as a research-forward alternative in emerging LLM design.
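To give a feel for why state space models are cheap on long sequences, here is a minimal sketch of the basic linear recurrence behind them: a hidden state is updated once per input and then read out. This is deliberately simplified and is not Mamba itself. The scalars `a`, `b`, and `c` are fixed assumptions for the example, whereas Mamba makes such parameters depend on the input and uses a hardware-aware scan.

```python
def ssm_scan(xs, a, b, c):
    """Run h_t = a * h_{t-1} + b * x_t and y_t = c * h_t over a sequence."""
    h = 0.0  # the entire "memory" of the past is this one fixed-size state
    ys = []
    for x in xs:
        h = a * h + b * x  # state update: constant work per step
        ys.append(c * h)   # readout
    return ys


# An impulse at the first step decays geometrically through the state.
print(ssm_scan([1.0, 0.0, 0.0], a=0.5, b=1.0, c=2.0))  # → [2.0, 1.0, 0.5]
```

Each step touches only the current state, so memory use stays constant no matter how long the sequence is, in contrast to attention, which looks back over every earlier token.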

[Bonus] 3 additional GPT-4o alternative models worth attention

While not as popular, these are strong alternatives to the GPT-4o model. Let’s review what makes them stand out among the competitors.

Falcon 180B by Technology Innovation Institute

Falcon 180B is one of the largest fully open-weight language models publicly available. Developed by the Technology Innovation Institute (TII), Falcon delivers impressive reasoning, multilingual understanding, and instruction-following capabilities on par with many proprietary models.

Its architecture is designed for efficient training and inference, making it a powerful choice for enterprises seeking an open-weight, GPT-4-level alternative. Before deploying it, check the terms of TII’s licence, particularly for hosted use.

OpenAssistant by LAION

OpenAssistant is a community-driven, open-source conversational AI initiative designed to provide a free alternative to proprietary chatbots.

It builds upon large language models fine-tuned on extensive instruction-following datasets curated by the LAION community. While it may not match GPT-4o’s scale, OpenAssistant emphasises transparency, accessibility, and continuous improvement through collaborative efforts.

RWKV by RWKV Foundation

RWKV offers a novel transformer-recurrent hybrid architecture that combines the strengths of transformers and RNNs. This design allows RWKV models to scale efficiently while maintaining strong language understanding and generation abilities.

While still emerging compared to mainstream transformers, RWKV’s open weights and unique efficiency profile make it a promising alternative for those prioritising lower compute resources and faster inference times.

To sum it up

As you can see, there is already a wide variety of capable AI tools making breakthroughs in natural language processing, understanding, and generation. We’ll likely see even more models of different types in the near future.

Here at Altamira, we believe that any technology should be used responsibly, especially AI, which is so powerful but understudied. That’s why, as an AI development company (among other services we provide), we stick to that responsibility to prevent possible manipulation or misuse.

We also aim to enable businesses of all sizes to scale much faster, deliver an enriched customer experience, and increase revenue. Harnessing the potential of artificial intelligence allows us to achieve those goals much faster.

Whether it’s natural language processing, predictive maintenance, chatbots, or any other AI-driven software development, we’ve got you covered.

Discover how our AI development services can help you take your business to new heights

Our team will help you learn the details.

Contact us

FAQ

GPT-4o is a version of OpenAI’s GPT-4 model designed to handle text, image, and audio inputs all in one system. The “o” stands for “omni,” meaning it can process different types of information together, like reading a diagram while you’re asking about it. It’s faster and more responsive than previous versions, especially in voice and vision tasks.

GPT-4o is free to use, to a point. It is available for free in ChatGPT, but with some usage limits. You’d need a ChatGPT Plus subscription if you want more access (like higher usage caps or additional tools). So you can try it out without paying, but the free tier has guardrails.

Several AI tools offer a good experience for students. Perplexity.ai stands out for quick research and sourcing. You.com has AI tools bundled with search. And Claude (by Anthropic) is often praised for its polite, detailed answers. Many students also use tools like Notion AI or Grammarly for writing help, depending on the task.

If you’re exploring beyond ChatGPT, try Claude, Gemini (from Google), or Mistral (open-source). Each has its strengths—Claude is known for careful, thoughtful replies, while Gemini is integrated with Google services. Open-source models like Mistral or LLaMA can also be used directly if you’re into customising your setup.