
During the 2010s, the widespread adoption of machine learning, driven by advances in big data and AI technology, raised new ethical dilemmas, ranging from bias and transparency to personal data management. As a result, AI ethics emerged as a separate field, with technology companies and AI research organisations taking proactive steps to run their AI initiatives responsibly.

In this article, you will learn the following:

- Definition of responsible artificial intelligence

- Key pillars of responsible AI

- Practices for implementation of responsible AI

- Benefits of ethical AI

Responsible AI and its meaning

Responsible AI, also known as ethical AI, is a set of principles and normative guidelines that direct the development, deployment, and governance of artificial intelligence systems so that they comply with ethical standards and legal requirements. In a nutshell, organisations adopt responsible AI to establish a framework of predefined principles, ethics, and rules that guides their AI initiatives.

Businesses increasingly use a variety of ML models to automate and enhance routine tasks that previously relied on human intervention.

An ML model is an algorithm trained on data to generate predictions. These predictions support decision-making in applications ranging from loan evaluation and anomaly detection to fraud detection.

AI models and the data they rely on are far from flawless. Alongside positive results, they can produce negative outcomes, both intended and unintended.

For example, Amazon began using an AI-powered recruiting tool to screen job candidates.

The tool was intended to scan the resumes submitted to Amazon and find patterns to help identify the best candidates at scale. However, it learned from historical data that reflected a gender imbalance, so it was skewed towards men. As a result, the system began to downgrade resumes from women.

By taking a responsible approach, companies can:

- Deploy AI systems that are compliant with regulations

- Ensure that development processes consider ethical, legal, and societal implications

- Address and mitigate bias in AI models

- Foster trust in AI

- Minimise the negative effects of AI

- Clarify accountability in case of AI-related issues

Responsible AI is not merely a buzzword or a theoretical concept. Major technology companies like Google, Microsoft, and IBM have advocated for the regulation of artificial intelligence and have established their own frameworks and guidelines to govern it.

Google’s CEO Sundar Pichai noted: “Artificial intelligence is like climate change in that it will proliferate worldwide and that people across the globe share a responsibility to create guardrails. That’s why we have to start building the frameworks globally”.

This leads us to the core principles of responsible artificial intelligence.

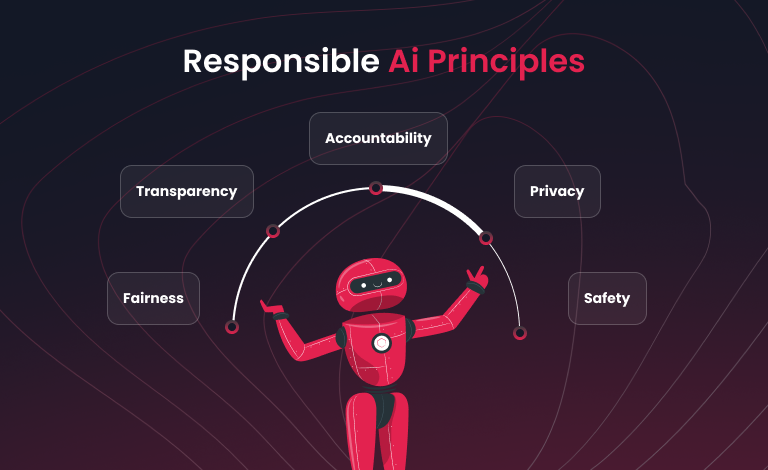

Key principles of responsible AI

Responsible AI frameworks seek to minimise the risks and threats posed by machine learning.

Fairness

AI systems must ensure fairness by treating all individuals equally and avoiding disparate impacts on similar groups. Thus, these systems should be free from bias.

While humans often exhibit bias in their judgments, computer systems are believed to have the potential to make fairer decisions. However, remember that a machine learning model is trained on real-world data, which is often biased. This can result in unfair outcomes that systematically downgrade certain groups based on race, gender, or sexual orientation.

To achieve algorithmic fairness, consider the following steps:

- Investigate biases and their origins in your data

- Assess and document the potential impacts and behaviours of the technology

- Define what fairness means for your model in various scenarios

- Revise training and testing data based on user feedback and usage patterns
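As one possible way to start investigating bias, the sketch below compares how often each group receives a positive outcome and reports the gap between the highest and lowest rate (often called the demographic parity difference). It is a minimal illustration, not a complete fairness audit: the `group` and `approved` field names and the sample decisions are invented, and demographic parity is only one of several fairness definitions you might choose for your model.

```python
from collections import defaultdict


def selection_rates(records, group_key="group", outcome_key="approved"):
    """Return the share of positive outcomes for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for record in records:
        group = record[group_key]
        totals[group] += 1
        positives[group] += 1 if record[outcome_key] else 0
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(rates):
    """Difference between the highest and lowest group selection rate."""
    return max(rates.values()) - min(rates.values())


# Hypothetical model decisions, invented purely for illustration.
decisions = [
    {"group": "A", "approved": True},
    {"group": "A", "approved": True},
    {"group": "A", "approved": True},
    {"group": "A", "approved": False},
    {"group": "B", "approved": True},
    {"group": "B", "approved": False},
    {"group": "B", "approved": False},
    {"group": "B", "approved": False},
]

rates = selection_rates(decisions)
gap = demographic_parity_gap(rates)
print(rates)
print(f"Demographic parity gap: {gap:.2f}")
```

A large gap does not prove the model is unfair on its own, but it is a signal that the data and the model deserve a closer look.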

Security

AI systems must protect private information and be resilient against attacks, similar to other forms of technology.

Machine learning models use training data to make predictions on new inputs. This data is often sensitive, particularly in industries like finance or healthcare. For example, MRI scans contain patient data known as protected health information (PHI). When using patient data for AI applications, companies must undertake additional preprocessing steps, such as anonymisation and de-identification, to comply with HIPAA regulations, which protect the privacy of health records in the US. In the EU, these concerns are addressed by the General Data Protection Regulation (GDPR).

To protect data and models, consider the following steps:

- Develop a data management strategy for responsible data collection and management

- Implement access control mechanisms

- Use on-device training where appropriate

- Safeguard the privacy of machine learning models
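The de-identification step mentioned above could look roughly like the following sketch, which drops direct identifiers and replaces the patient ID with a keyed hash so that records can still be linked without exposing the original ID. Everything here is an assumption made for illustration: the field names, the sample record, and the key are hypothetical, and this preprocessing alone should not be read as sufficient for HIPAA or GDPR compliance.

```python
import hashlib
import hmac

# Hypothetical field names; a real schema and the applicable legal
# requirements determine what must be removed or transformed.
DIRECT_IDENTIFIERS = {"name", "email", "phone"}


def pseudonymise_id(value, secret_key):
    """Replace an identifier with a keyed hash so records can still be linked."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def deidentify(record, secret_key):
    """Drop direct identifiers and pseudonymise the patient ID."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    cleaned["patient_id"] = pseudonymise_id(record["patient_id"], secret_key)
    return cleaned


# Invented example record and key; a real key would come from a secrets manager.
key = b"example-key-not-for-production"
record = {
    "patient_id": "P-1001",
    "name": "Jane Doe",
    "email": "jane@example.com",
    "phone": "555-0100",
    "age_band": "40-49",
    "diagnosis_code": "X00",
}

safe_record = deidentify(record, key)
print(safe_record)
```

A keyed hash is used instead of a plain hash so that someone without the key cannot recompute the mapping by hashing guessed IDs.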

Reliability

AI systems must function reliably, safely, and consistently both under normal conditions and in unexpected situations.

Organisations need to create AI systems that deliver strong performance and are safe to use while minimising any negative impacts. Consider what might happen if something goes wrong with an AI system. How will the algorithm react in an unforeseen scenario? An AI system can be deemed reliable if it effectively handles all “what if” situations and responds appropriately to new circumstances without detriment to users.

To ensure AI reliability and safety, you should:

- Evaluate what-if scenarios and determine how the system should respond to them

- Develop strategies for timely human intervention if something goes wrong

- Prioritise human safety above all
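One common way to plan for human intervention is to let the system act on its own only when it is confident, and to route everything else to a person. The sketch below illustrates that idea under stated assumptions: the `decide` function, the `Decision` type, and the 0.9 threshold are hypothetical, and a real system would choose its threshold and escalation path based on the risks of its domain.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    label: str
    confidence: float
    needs_human_review: bool


def decide(label, confidence, threshold=0.9):
    """Accept the model's answer only when its confidence is valid and high enough."""
    if not 0.0 <= confidence <= 1.0:
        # Unexpected input (out of range or NaN): fail safe rather than act on it.
        return Decision(label="undetermined", confidence=0.0, needs_human_review=True)
    if confidence < threshold:
        # Low confidence: keep the suggestion but ask a person to confirm it.
        return Decision(label=label, confidence=confidence, needs_human_review=True)
    return Decision(label=label, confidence=confidence, needs_human_review=False)


print(decide("approve", 0.97))
print(decide("approve", 0.62))
print(decide("approve", float("nan")))
```

The design choice here is that every unforeseen case defaults to human review, so a malfunction leads to a delay rather than to an unchecked automated decision.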

Transparency

People need to understand how AI systems make decisions, especially when those decisions affect their lives.

AI systems are often black boxes, which makes it challenging to explain their inner workings and mechanisms. Even people sometimes struggle to explain their own decisions, so it is no surprise that complex AI systems such as neural networks are difficult to interpret.

While you may have your own ideas on achieving transparency and explainability in AI, here are some practices to consider:

- Establish interpretability criteria and include them in a checklist

- Clearly define the types of data AI systems utilise, their intended purposes, and the factors influencing their outcomes

- Document the system’s behaviour throughout the development and testing stages

- Provide clear explanations of how the model functions to end-users

- Outline procedures for correcting errors made by the system
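To make "the factors influencing their outcomes" concrete, the sketch below breaks the score of a simple linear model into per-feature contributions and ranks them by size. This is an illustration under strong assumptions: the weights, bias, and applicant values are invented, and the approach only works directly for linear scoring models. More complex models such as neural networks need dedicated explanation techniques.

```python
def explain_linear_score(weights, bias, features):
    """Return the score and each feature's contribution, largest effect first."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked


# Hypothetical weights and applicant features, invented for illustration.
weights = {"income": 0.5, "debt": -0.75, "years_employed": 0.25}
applicant = {"income": 4.0, "debt": 2.0, "years_employed": 2.0}

score, ranked = explain_linear_score(weights, bias=-1.0, features=applicant)
print(f"Score: {score:.2f}")
for name, contribution in ranked:
    direction = "raised" if contribution > 0 else "lowered"
    print(f"{name} {direction} the score by {abs(contribution):.2f}")
```

An explanation in this form can be shown to end-users and stored alongside the decision, which also supports later error correction.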

Accountability

People must retain responsibility for and oversight of AI systems.

Lastly, all parties involved in the creation of AI systems bear ethical responsibility for their proper use and for preventing misuse. A company must clearly define the roles and responsibilities of those accountable for adherence to established AI principles.

The level of responsibility grows with the complexity and autonomy of an AI system. The more autonomous an AI system becomes, the greater the accountability that rests on the organisation developing, deploying, or using it. This heightened accountability stems from the potentially critical impact on people’s lives and safety.

Implementing responsible AI: tips and practices

Implementing responsible AI guidelines at the enterprise level requires a holistic approach covering all AI development and deployment stages.

Establish company-wide responsible AI principles

Define the core principles of responsible AI for your organisation and assign a dedicated interdisciplinary team focused on AI ethics to uphold them. This team should bring together people from different functions, such as AI specialists, ethicists, legal advisors, and business executives.

Raise your team’s awareness

Implement training initiatives and workshops to educate employees and decision-makers on responsible AI practices. Promote learning about potential biases, ethical implications, and the significance of integrating responsible AI into business practices.

Integrate ethics across the AI development lifecycle

Integrate responsible AI practices throughout the entire AI development process, spanning from data gathering and model training to deployment and continuous monitoring. Implement methodologies to detect and reduce biases in AI systems. Conduct regular evaluations of models to ensure fairness, particularly concerning sensitive attributes like socioeconomic status, race, and gender. Emphasise transparency by ensuring AI systems are explainable. Offer documentation detailing data origins, algorithms used, and decision-making processes. Users and stakeholders should be able to understand the decision-making mechanisms of AI systems.

Protect user privacy

Set up reliable data and AI governance protocols to safeguard user privacy and sensitive data. Clearly articulate policies governing data usage, secure informed consent, and adhere strictly to data protection regulations.

Facilitate human oversight

Incorporate systems for human oversight in key decision-making processes. Establish distinct lines of accountability to ensure that responsible individuals can be identified and held accountable for AI systems’ results. Implement continuous monitoring of AI systems to detect and resolve ethical concerns, biases, or emerging issues. Conduct regular audits of AI models to evaluate adherence to ethical standards.

Encourage external collaboration

Encourage partnerships with external organisations, research institutions, and open-source communities dedicated to responsible AI. Stay updated on advancements in responsible AI practices and initiatives, actively participating in industry-wide endeavours to contribute knowledge and promote best practices.

The benefits of responsible AI systems

Adopting ethical AI practices can enhance business success. While technology drives business growth, the manner in which it is employed greatly influences customer relationships and corporate reputation. Here are some key advantages of ethical AI in business.

Improved brand trust and reputation

Integrating ethical AI practices fosters trust by maintaining transparency and informing customers about their data usage. When people subscribe to your email marketing, they seek clarity on how their email addresses will be used. For example, informing customers that their emails may be used to send product recommendations helps build trust and encourages them to feel comfortable sharing their sensitive information.

Mitigated legal and reputational risks

Implementing responsible artificial intelligence reduces the risk of legal disputes and reputational damage. Today, AI developers face copyright infringement lawsuits over the use of copyrighted text and images in their training data.

Furthermore, neglecting ethical AI practices can tarnish a company’s reputation. For example, if AI tools used in learning processes exhibit bias, the software provider may be perceived as biased by extension.

Enhanced customer loyalty

Ethical AI enhances customer satisfaction and loyalty. Customers generally support AI usage as long as it is done responsibly. For example, AI can offer personalised product recommendations, aiding customers in discovering items they are likely to buy.

This personalised approach can boost both sales and customer satisfaction. Nevertheless, customers must understand how their data is used. Ethical AI principles require transparency about data usage, management, and protection, even when the data is used to enrich their shopping experience.

Final words

There are many reasons to be hopeful about a future where responsible AI tools improve human lives. AI is already making significant advances in healthcare, education, and data analysis. When designed ethically, it can blend technological innovation with fundamental human values, leading to a thriving, inclusive, and sustainable global community.

Our team helps you adopt industry-leading practices to smoothly integrate AI and ML solutions into your business. Our holistic approach ensures optimised performance, scalability, and tangible results from your AI investments.

What areas does the AI adoption framework cover?

- Ethical concerns and risk management

- Data quality, availability, accessibility, and management

- Data protection and privacy

- Legal and regulatory obligations (EU AI Act)

- Continuous effectiveness improvement

- Cost-effectiveness, sustainability, and total cost of ownership

Get in touch for a free expert consultation.