
How do we identify objects and people around us or in images? Essentially, our brains rely on detecting features to classify the objects we observe.

You’ve likely experienced countless moments in your life where you looked at something, initially identified it as one thing, and then, upon closer inspection, realised it was something entirely different.

In such cases, your brain quickly detects the object, but due to the brief glance, it doesn’t process enough features to categorise it accurately.

For example, take an ambiguous picture such as the well-known rabbit-duck illusion. Do you see a rabbit or a duck?

The main point is that your brain identifies objects in an image by first detecting their features.

Thus, your perception depends on the lines and angles your brain chooses as the starting point for its “feature detection” process.

What is feature detection and description?

Feature detection and description are fundamental concepts in computer vision, needed for tasks like image recognition, object tracking, and image stitching. They allow computers to identify unique and informative elements within an image, which makes visual data easier to analyse.

This process involves pinpointing specific areas or structures in an image that are worth attention and can serve as reference points for further analysis. Good features are typically distinctive, consistent, and resilient to changes in lighting, rotation, and scale. Commonly detected features include corners, edges, blobs, and key points.
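To make the idea of detecting edges concrete, here is a minimal sketch that uses the Sobel operator, one classic way of finding places where brightness changes sharply. It is an illustration only: the tiny test image, the threshold value, and the function names are assumptions made for this example, and it uses plain Python lists rather than an imaging library.

```python
# Sobel kernels that respond to horizontal and vertical brightness changes.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def gradient_magnitude(image):
    """Return the Sobel gradient magnitude for every interior pixel."""
    height, width = len(image), len(image[0])
    result = [[0.0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = gy = 0.0
            for dy in range(3):
                for dx in range(3):
                    pixel = image[y + dy - 1][x + dx - 1]
                    gx += SOBEL_X[dy][dx] * pixel
                    gy += SOBEL_Y[dy][dx] * pixel
            result[y][x] = (gx * gx + gy * gy) ** 0.5
    return result


def detect_edges(image, threshold):
    """Return (row, column) positions whose gradient magnitude exceeds threshold."""
    magnitude = gradient_magnitude(image)
    return [(y, x)
            for y, row in enumerate(magnitude)
            for x, value in enumerate(row)
            if value > threshold]


# Hypothetical 5x6 grayscale image: dark left half (0), bright right half (100).
image = [[0, 0, 0, 100, 100, 100] for _ in range(5)]
edges = detect_edges(image, threshold=50.0)
print(edges)  # positions along the dark-to-bright boundary
```

Only the pixels next to the dark-to-bright boundary pass the threshold, so a 30-pixel image is reduced to a handful of edge positions.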

Here’s why feature detection is important in computer vision:

- Compact representation: Images contain a large amount of data, which makes direct analysis and comparison difficult. Features are distinctive patterns or structures within an image that can be represented with fewer data points, facilitating more efficient image processing and analysis.

- Robustness to variability: Images differ due to factors like lighting, perspective, scale, rotation, and occlusion. Features that remain consistent despite these variations are important for reliable image analysis. For example, a clearly defined corner should still be identifiable as a corner even if the image is rotated or the lighting changes.

- Matching and recognition: Feature detection and description enable the matching of corresponding features in different images. Computer vision systems can discern relationships and establish meaningful connections by identifying shared features across images.

- Object tracking and motion analysis: In fields like surveillance, autonomous vehicles, and robotics, feature detection helps to track objects across frames and analyse their motion patterns. Effective feature tracking lets systems estimate object velocities, predict their paths, and make decisions based on observed behaviour.

- Image registration: In areas such as medical imaging and remote sensing, feature detection is used to align and register images taken at different times or from different sensors. This alignment allows for precise comparison and analysis of changes over time or between different data sources.

- 3D reconstruction: Features are required for creating 3D models from multiple 2D images (stereo vision) or depth information. By identifying matching features in different images, the relative positions of cameras and scene objects can be determined, thereby reconstructing 3D scenes.

- Image stitching and panorama creation: When creating panoramic images from multiple overlapping photos, feature detection is needed to identify corresponding points across the overlapping areas. These identified points are then used to align and seamlessly stitch the images together.

- Local information detection: Features denote localised patterns within an image, characterising specific regions of interest. This capability proves valuable for extracting details from scenes, detecting objects within images, and analysing texture variations.

- Computational efficiency: Concentrating on pertinent features rather than analysing the entire image minimises computational workload and memory usage. This optimisation is particularly significant in real-time applications where operational efficiency plays a key role.
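The matching step mentioned above can be sketched in a few lines. The example below assumes each feature has already been described by a short numeric vector (a descriptor) and pairs features across two images by nearest-neighbour distance. It also applies a ratio test, a common filter that rejects ambiguous matches. The descriptor values, the function names, and the 0.75 ratio are illustrative assumptions, not values from any particular library.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two descriptor vectors."""
    return sum((p - q) ** 2 for p, q in zip(a, b))


def match_descriptors(descriptors_a, descriptors_b, ratio=0.75):
    """Pair each descriptor in A with its nearest neighbour in B.

    A match is kept only if the best distance is clearly smaller than the
    second-best distance (ratio test), which filters ambiguous features.
    """
    matches = []
    for i, descriptor in enumerate(descriptors_a):
        ranked = sorted(
            (squared_distance(descriptor, other), j)
            for j, other in enumerate(descriptors_b)
        )
        if len(ranked) < 2:
            continue  # the ratio test needs two candidates
        (best, j), (second, _) = ranked[0], ranked[1]
        # Distances are squared, so the ratio is squared as well.
        if best < (ratio ** 2) * second:
            matches.append((i, j))
    return matches


# Hypothetical 3-dimensional descriptors from two images.
image_a = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.5, 0.5, 0.0]]
image_b = [[0.0, 0.9, 0.1], [0.9, 0.1, 0.0], [0.0, 0.0, 1.0]]
matches = match_descriptors(image_a, image_b)
print(matches)
```

The third descriptor in `image_a` sits roughly halfway between two candidates in `image_b`, so the ratio test discards it instead of guessing.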


What is a neural network?

A neural network is a type of machine learning model designed to mimic the decision-making processes of the human brain. It operates by simulating the interconnected behaviour of biological neurons to recognise patterns, evaluate options, and make decisions.

Neural networks depend on training with data to enhance their accuracy step-by-step. Once optimised, they become potent assets in fields like computer science and artificial intelligence, enabling rapid classification and clustering of data. Tasks such as speech or image recognition, which once required hours for manual analysis, can now be completed in minutes. Google’s search algorithm is one of the most prominent examples of neural network applications.
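As a rough illustration of how training improves accuracy step by step, the sketch below trains a single artificial neuron (a perceptron) to reproduce the logical AND function. This is a deliberately tiny stand-in for a real network: the task, the learning rate, and the number of epochs are assumptions chosen to keep the example short.

```python
def predict(weights, bias, inputs):
    """Fire (1) if the weighted sum of the inputs is positive, else 0."""
    activation = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if activation > 0 else 0


def train(samples, epochs=20, learning_rate=0.1):
    """Adjust weights a little after every wrong prediction (perceptron rule)."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Training data for logical AND: the output is 1 only when both inputs are 1.
samples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train(samples)
predictions = [predict(weights, bias, inputs) for inputs, _ in samples]
print(predictions)
```

Each pass over the data nudges the weights only when a prediction is wrong, which is the same basic idea that larger networks apply with more sophisticated update rules.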

Types of neural networks for feature detection

Feature detection in neural networks is paramount for numerous applications, including image and speech recognition, natural language processing, and more. Here are some widely used neural networks for feature detection.

Convolutional Neural Networks (CNNs)

- Architecture: CNNs are composed of layers like convolutional layers, pooling layers, and fully connected layers.

- Applications: Image and video recognition, image classification, medical image analysis, and object detection.

- Examples: AlexNet, VGGNet, ResNet, Inception, MobileNet.
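The sketch below walks one small image through the first two layer types listed above: a convolutional layer followed by a pooling layer, with a ReLU activation in between. In a real CNN the kernel values are learned during training. Here the kernel is fixed by hand as an assumption, so that the output is easy to follow.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image without padding (cross-correlation)."""
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    return [
        [
            sum(kernel[i][j] * image[y + i][x + j]
                for i in range(kernel_h) for j in range(kernel_w))
            for x in range(out_w)
        ]
        for y in range(out_h)
    ]


def relu(feature_map):
    """Keep positive responses and zero out negative ones."""
    return [[max(0, value) for value in row] for row in feature_map]


def max_pool(feature_map, size=2):
    """Non-overlapping max pooling; leftover rows and columns are dropped."""
    return [
        [
            max(feature_map[y + i][x + j]
                for i in range(size) for j in range(size))
            for x in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for y in range(0, len(feature_map) - size + 1, size)
    ]


# Hypothetical 5x5 image with a bright vertical stripe in columns 2 and 3.
image = [[0, 0, 1, 1, 0] for _ in range(5)]
# Hand-picked kernel that responds to dark-to-bright vertical edges.
kernel = [[-1, 1], [-1, 1]]

feature_map = convolve2d(image, kernel)   # 4x4 map of edge responses
pooled = max_pool(relu(feature_map))      # 2x2 summary of the strongest responses
print(pooled)
```

The convolution responds positively at the left side of the stripe and negatively at the right side. ReLU discards the negative response, and pooling keeps only the strongest value in each 2x2 region.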

Recurrent Neural Networks (RNNs)

- Architecture: RNNs have a loop allowing information to persist, using hidden states to remember previous inputs.

- Applications: Time-series prediction, natural language processing, and speech recognition.

- Variants: Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU).
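The "loop" in an RNN can be illustrated with a single recurrent unit whose hidden state is updated at every time step. The sketch below uses scalar inputs and fixed, hand-picked weights. Both are simplifying assumptions, since real RNNs use learned weight matrices.

```python
import math


def rnn_step(x, h_prev, w_x, w_h, b):
    """One recurrent update: mix the new input with the previous hidden state."""
    return math.tanh(w_x * x + w_h * h_prev + b)


def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Feed a sequence through the unit and return the hidden state at each step."""
    h = 0.0
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states


# The two sequences end with the same inputs but start differently.
with_signal = run_rnn([1.0, 0.0, 0.0])
without_signal = run_rnn([0.0, 0.0, 0.0])
print(with_signal[-1], without_signal[-1])
```

Even though both sequences end with zeros, the final hidden state of the first one is still positive, because the hidden state carries a fading trace of the earlier input.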

Autoencoders

- Architecture: Composed of an encoder to map the input to a lower-dimensional space and a decoder to reconstruct the input.

- Applications: Dimensionality reduction, denoising, anomaly detection, and unsupervised feature learning.

- Variants: Variational Autoencoders (VAEs), Sparse Autoencoders.

Generative Adversarial Networks (GANs)

- Architecture: Consists of two networks, a generator and a discriminator, competing against each other.

- Applications: Image generation, image-to-image translation, and super-resolution.

- Examples: DCGAN, StyleGAN, CycleGAN.

Transformers

- Architecture: Uses self-attention mechanisms to weigh the influence of different input parts.

- Applications: Natural language processing, text generation, and feature detection from sequences.

- Examples: BERT, GPT, T5, Vision Transformers (ViT).
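Self-attention can be sketched as scaled dot-product attention: each input scores every other input, the scores are turned into weights with a softmax, and the output is a weighted mix of the inputs. The example below is simplified on purpose. It uses the token vectors directly as queries, keys, and values, whereas real transformers first pass them through learned projections. The token values are made up for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(score - peak) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    scale = math.sqrt(len(keys[0]))
    outputs, all_weights = [], []
    for query in queries:
        scores = [sum(q * k for q, k in zip(query, key)) / scale
                  for key in keys]
        weights = softmax(scores)
        output = [sum(w * value[d] for w, value in zip(weights, values))
                  for d in range(len(values[0]))]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical 2-dimensional token embeddings; the first two are similar.
tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
print([round(w, 3) for w in weights[0]])
```

The first token gives most of its attention to itself and to the similar second token, and the least to the dissimilar third token.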

Region-based Convolutional Neural Networks (R-CNNs)

- Architecture: Combines region proposals with CNNs for object detection.

- Applications: Object detection and segmentation.

- Variants: Fast R-CNN, Faster R-CNN, Mask R-CNN.

YOLO (You Only Look Once)

- Architecture: Uses a single CNN to predict multiple bounding boxes and class probabilities for objects in images.

- Applications: Real-time object detection.

- Versions: YOLOv1, YOLOv2, YOLOv3, YOLOv4, YOLOv5.
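Detectors that predict many bounding boxes often return several overlapping boxes for the same object. A common post-processing step, not described in this article but widely used with both R-CNN and YOLO style detectors, is non-maximum suppression based on intersection over union (IoU). The sketch below is a minimal version. The box format `(x1, y1, x2, y2)`, the scores, and the 0.5 threshold are assumptions for illustration.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    x1 = max(box_a[0], box_b[0])
    y1 = max(box_a[1], box_b[1])
    x2 = min(box_a[2], box_b[2])
    y2 = min(box_a[3], box_b[3])
    intersection = max(0, x2 - x1) * max(0, y2 - y1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def non_max_suppression(detections, iou_threshold=0.5):
    """Keep the highest-scoring box in each group of heavily overlapping boxes.

    detections is a list of (box, score) pairs.
    """
    kept = []
    for box, score in sorted(detections, key=lambda d: d[1], reverse=True):
        if all(iou(box, kept_box) <= iou_threshold for kept_box, _ in kept):
            kept.append((box, score))
    return kept


# Hypothetical detections: the first two boxes cover the same object.
detections = [
    ((10, 10, 50, 50), 0.9),
    ((12, 12, 52, 52), 0.8),
    ((100, 100, 140, 140), 0.7),
]
kept = non_max_suppression(detections)
print(kept)
```

The second box overlaps the first by more than the threshold and has a lower score, so it is dropped. The third box does not overlap anything and is kept.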

These neural networks have been widely adopted in both academic research and industry due to their ability to learn and detect complex features from large datasets.

Feature detection limitations

Even with advances in technology, feature detection still has inherent limitations. Here are the key challenges of identifying and interpreting features in various applications.

- Challenges with scale and rotation invariance: Many traditional feature detection algorithms struggle with variations in scale and rotation. While some approaches try to mitigate these issues, achieving true scale and rotation invariance remains a significant challenge.

- Focus on local information: Most feature detection algorithms primarily capture information within a local area around a point. This localised focus can be restrictive in scenarios where we need to understand the global context for accurate analysis.


- Ambiguity and consistency: Identifying distinctive and consistently detectable features across different images poses difficulties. Some features may exhibit ambiguity or lack repeatability under varying lighting conditions or perspectives.

- Impact of noise sensitivity: Feature detection is susceptible to noise, potentially resulting in false positives or missed detections. In images with high noise levels, wrong feature points may be detected, adversely affecting subsequent data processing.

- Specialisation in feature types: Various feature detection techniques are tailored to specific types of features, such as corners, edges, or blobs. Selecting the appropriate method depends on the application’s requirements and the specific features targeted for detection.

- Semantic understanding limitations: Features typically represent low-level visual patterns and may not encompass higher-level semantic information. While effective for matching and registration tasks, they often fall short in providing deep content understanding.

- Challenges with object occlusion: Object occlusion can result in missing or inaccurate feature matches, especially when significant portions of feature points are obscured.

- Impact of light variability: Many feature detection algorithms are sensitive to changes in lighting conditions. These variations can distort feature detection and make it much harder to match features accurately.
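The noise sensitivity described above is easy to reproduce. In the sketch below, a single noisy pixel in an otherwise flat image triggers false edge detections, and a simple 3x3 mean filter applied beforehand removes them. The image, the threshold, and the very basic edge test are assumptions chosen to keep the example small.

```python
def box_blur(image):
    """3x3 mean filter; border pixels are copied unchanged."""
    height, width = len(image), len(image[0])
    blurred = [row[:] for row in image]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            window = [image[y + dy][x + dx]
                      for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
            blurred[y][x] = sum(window) / 9
    return blurred


def horizontal_edges(image, threshold):
    """Positions where the left-to-right brightness difference exceeds threshold."""
    return [
        (y, x)
        for y in range(len(image))
        for x in range(1, len(image[0]) - 1)
        if abs(image[y][x + 1] - image[y][x - 1]) > threshold
    ]


# Hypothetical flat 5x5 image (brightness 10) with one noisy pixel in the centre.
noisy = [[10] * 5 for _ in range(5)]
noisy[2][2] = 100

raw_detections = horizontal_edges(noisy, threshold=40)
smoothed_detections = horizontal_edges(box_blur(noisy), threshold=40)
print(raw_detections, smoothed_detections)
```

Smoothing is a trade-off rather than a cure: the same filter that suppresses noise also weakens genuine edges, so the filter size and threshold usually need tuning for each application.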

Applications of feature detection

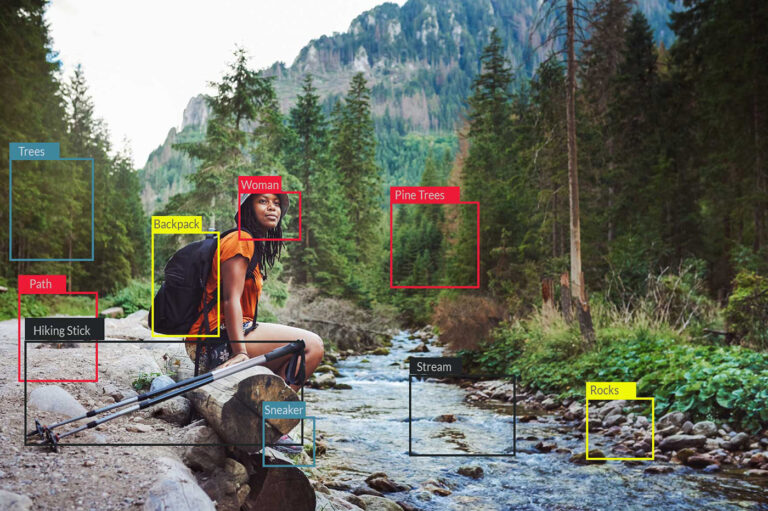

Image recognition

Feature detection plays an important role in image recognition tasks such as image classification, object detection, and semantic segmentation. By identifying and extracting meaningful features from images, systems can accurately categorise objects and scenes, enabling applications in automated surveillance, medical imaging, and augmented reality.


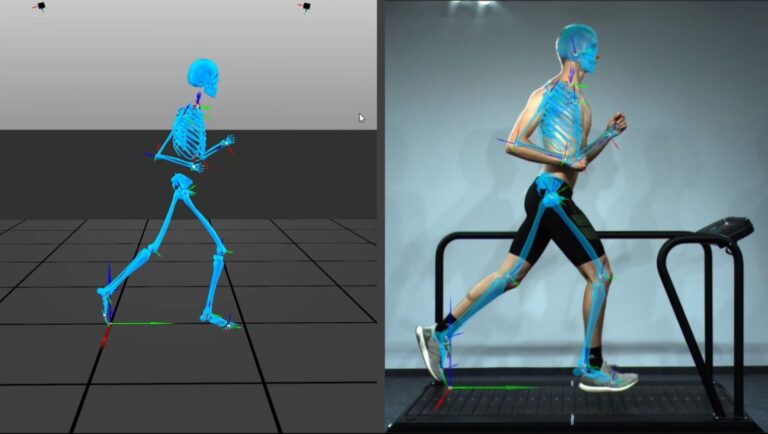

Motion analysis

In fields like surveillance, autonomous vehicles, and healthcare, feature detection is instrumental in tracking objects and analysing motion patterns. Algorithms that detect and track key features across video frames allow systems to monitor movement, predict trajectories, and make informed decisions in real-time scenarios.
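Once a feature has been tracked across several frames, estimating its velocity and predicting its next position can be as simple as the sketch below. It assumes a constant-velocity model and a list of already-matched positions for one feature. The coordinates, the frame interval, and the function names are hypothetical.

```python
def estimate_velocity(track, frame_interval):
    """Average velocity (units per second) between the first and last position."""
    (x_start, y_start), (x_end, y_end) = track[0], track[-1]
    elapsed = (len(track) - 1) * frame_interval
    return ((x_end - x_start) / elapsed, (y_end - y_start) / elapsed)


def predict_position(track, frame_interval, frames_ahead):
    """Extrapolate the last position, assuming the velocity stays constant."""
    vx, vy = estimate_velocity(track, frame_interval)
    x, y = track[-1]
    horizon = frames_ahead * frame_interval
    return (x + vx * horizon, y + vy * horizon)


# Hypothetical pixel positions of one feature in four consecutive frames,
# captured 0.5 seconds apart.
track = [(10, 20), (12, 21), (14, 22), (16, 23)]
velocity = estimate_velocity(track, frame_interval=0.5)
predicted = predict_position(track, frame_interval=0.5, frames_ahead=2)
print(velocity, predicted)
```

Real tracking systems usually replace this straight-line extrapolation with a filter that also accounts for measurement noise and changes in speed.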

Medical imaging

Feature detection helps align and compare images to facilitate disease diagnosis and treatment planning. By extracting and analysing features from medical scans such as MRI and CT, healthcare professionals can detect abnormalities, monitor disease progression, and personalise treatment strategies.


The final words

Developments in feature detection, particularly advancements in deep learning, continue to drive progress across industries. Feature detection techniques improve the accuracy and efficiency of computer vision systems and pave the way for new advancements in fields like healthcare, autonomous navigation, and industrial automation.

In a nutshell, neural networks play a key role in feature detection by using their distinct architectures and capabilities. From CNNs for spatial hierarchies to transformers for long-range dependencies, each type offers unique advantages in extracting and analysing features from complex data.

Build an image recognition solution from scratch or upgrade your existing system with Altamira.
